Understanding How Language Models Generate Text and Why Mistakes Happen

Language models like GPT (Generative Pretrained Transformer) are designed to generate text one token at a time. A “token” in this context can be as short as one character or as long as one word, depending on the specific implementation. The model does not plan out the entire response in advance; instead, it predicts and generates the next token based on the tokens that have already been generated.

The Process of Text Generation

When you ask a language model a question, it starts by generating the first token in its response. This token is selected based on the probabilities assigned to various potential tokens, given the context of the input. After generating the first token, the model generates the next one, again based on probabilities that consider both the input and the tokens that have already been produced. This process continues until the model produces an entire response.

Because each token is generated in sequence, the model cannot retroactively change its mind. For example, if it starts an answer with “No,” it is committed to providing a response that explains why the answer is “No.” The model does not have a mechanism to stop and reconsider whether “No” was the correct starting point. This sequential generation is both the strength and the limitation of language models.

The Inevitable Possibility of Error

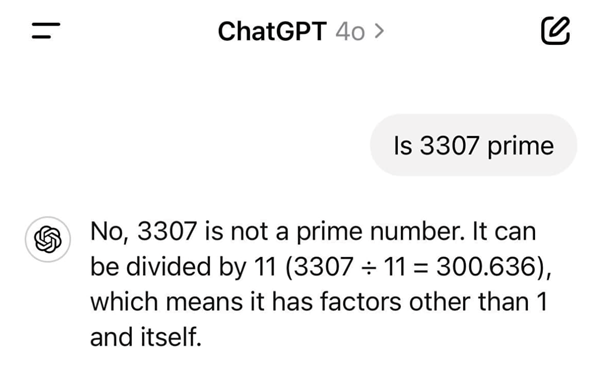

As advanced as these models are, the probability of generating an incorrect token—especially as the first token in a sequence—is never zero. This is where mistakes can occur, as shown in the image you provided.

In the example, the user asked whether the number 3307 is a prime number. The model responded with “No,” and then went on to try to justify that “No” by providing an incorrect explanation. This happened because the model’s internal probability calculations led it to select “No” as the first token, even though the correct answer should have been “Yes.” Once “No” was selected, the model had no way of backtracking, and so it proceeded to generate a plausible-sounding explanation for why 3307 is not a prime number.

The Limitations of Probabilistic Text Generation

The scenario you’ve described highlights a fundamental limitation of probabilistic text generation models: they generate text without a full understanding of the world. They are statistical machines, predicting the most likely next token based on the data they were trained on. When the data or the prompt leads to an error in the early stages of generation, the model cannot self-correct.

While this might seem like a significant flaw, it’s important to remember that these models are still highly effective at a wide range of tasks. The key is understanding their limitations and knowing that occasional errors are inevitable. For critical applications, it’s essential to have mechanisms in place to verify the output of these models.

The Future of Language Models

Research is ongoing to address these kinds of issues, including efforts to create models that can plan further ahead or have mechanisms for self-correction. However, as long as language models rely on probabilistic token generation, the possibility of errors like the one shown will persist.

In conclusion, the mistake illustrated in the image is a clear example of how language models work and why they sometimes generate incorrect or nonsensical answers. Understanding this process is crucial for using these models effectively and for appreciating both their capabilities and their limitations.

Related News

Wuthering Heights: Book vs Movie – Which Resonates More?

Read • Watch • Learn When Emily Brontë published Wuthering Heights in 1847, few expected the novel to become one of the…

Read MoreWycherley International School Dehiwala Hosts Joyful Kiddies Fiesta 2026 Celebration

Wycherley International School Dehiwala welcomed students, parents and teachers to a spirited celebration of childhood at its Kiddies Fiesta 2026 on 11…

Read MoreBusiness, BUT NOT AS USUAL – Southern Cross University Brings Bachelor of Business to Sri Lankan Students at NSBM Green University

SCU Sri Lanka National Launch | 19–21 February 2026 | NSBM Green University, Homagama Southern Cross University (SCU), one of Australia’s leading…

Read MoreBelarusian State Medical University:A Trusted Pathway to Studying Medicine Abroad with ISC Education

For many Sri Lankan students, gaining entry to a local medical faculty remains highly competitive despite strong academic performance. As a result,…

Read MoreCourses

-

The future of higher education tech: why industry needs purpose-built solutions

For years, Institutions and education agencies have been forced to rely on a patchwork of horizontal SaaS solutions – general tools that… -

MBA in Project Management & Artificial Intelligence – Oxford College of Business

In an era defined by rapid technological change, organizations increasingly demand leaders who not only understand traditional project management, but can also… -

Scholarships for 2025 Postgraduate Diploma in Education for SLEAS and SLTES Officers

The Ministry of Education, Higher Education and Vocational Education has announced the granting of full scholarships for the one-year weekend Postgraduate Diploma… -

Shape Your Future with a BSc in Business Management (HRM) at Horizon Campus

Human Resource Management is more than a career. It’s about growing people, building organizational culture, and leading with purpose. Every impactful journey… -

ESOFT UNI Signs MoU with Box Gill Institute, Australia

ESOFt UNI recently hosted a formal Memorandum of Understanding (MoU) signing ceremony with Box Hill Institute, Australia, signaling a significant step in… -

Ace Your University Interview in Sri Lanka: A Guide with Examples

Getting into a Sri Lankan sate or non-state university is not just about the scores. For some universities' programmes, your personality, communication… -

MCW Global Young Leaders Fellowship 2026

MCW Global (Miracle Corners of the World) runs a Young Leaders Fellowship, a year-long leadership program for young people (18–26) around the… -

Enhance Your Arabic Skills with the Intermediate Language Course at BCIS

BCIS invites learners to join its Intermediate Arabic Language Course this November and further develop both linguistic skills and cultural understanding. Designed… -

Achieve Your American Dream : NCHS Spring Intake Webinar

NCHS is paving the way for Sri Lankan students to achieve their American Dream. As Sri Lanka’s leading pathway provider to the… -

National Diploma in Teaching course : Notice

A Gazette notice has been released recently, concerning the enrollment of aspiring teachers into National Colleges of Education for the three-year pre-service… -

IMC Education Features Largest Student Recruitment for QIU’s October 2025 Intake

Quest International University (QIU), Malaysia recently hosted a pre-departure briefing and high tea at the Shangri-La Hotel in Colombo for its incoming… -

Global University Employability Ranking according to Times Higher Education

Attending college or university offers more than just career preparation, though selecting the right school and program can significantly enhance your job… -

Diploma in Occupational Safety & Health (DOSH) – CIPM

The Chartered Institute of Personnel Management (CIPM) is proud to announce the launch of its Diploma in Occupational Safety & Health (DOSH),… -

Small Grant Scheme for Australia Awards Alumni Sri Lanka

Australia Awards alumni are warmly invited to apply for a grant up to AUD 5,000 to support an innovative project that aim… -

PIM Launches Special Programme for Newly Promoted SriLankan Airlines Managers

The Postgraduate Institute of Management (PIM) has launched a dedicated Newly Promoted Manager Programme designed to strengthen the leadership and management capabilities…

Newswire

-

UAE intercepts over 700 missiles, drones; Sri Lankans among 58 injured

ON: March 1, 2026 -

Fuel not to be dispensed into cans; legal action against hoarders

ON: March 1, 2026 -

Middle East on Edge: 9 Latest Updates

ON: March 1, 2026